Tricky that SELECT statement generates REDO

After gaining theoretical knowledge from the Oracle documents or from Oracle university training every DBA will be under presumption that only DML operations in the database generates REDO segments. But practically it is not completely true. On few occasions you can identify that SELECT statements generates REDO segments in the instance. Next question you might get is whether REDO generated by SELECT statement are pushed into REDO log file and used for recovery operations or not. This blog will explain why SELECT statement generates REDO and eventually let you know if this REDO is used elsewhere.

Examples:

- SELECT statement after inserting – Huge data

- SELECT statement after inserting – Small data

Considerations:

- Database Buffer cache is of fixed size 300MB – Not ideal though.

- Explain plan statistics are used to demonstrate the concept.

- SELECT statement after inserting – Huge data

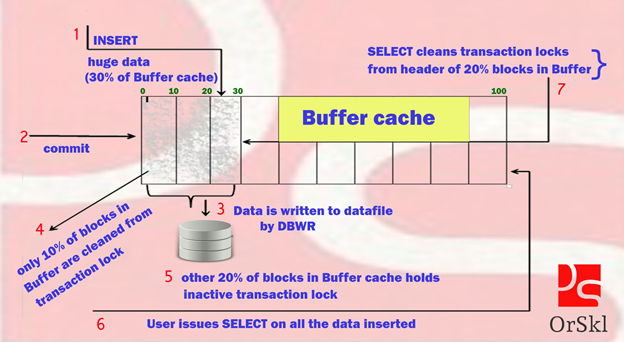

I will be referring to the image below to explain the behavior of SELECT statement just after heavy INSERT operation.

Explanation for each numbered stages in the image:

- A user performs a HUGE insert operation of 90MB data on a table (30% of the total buffer cache size).

- These data blocks headers in the buffer cache will have transaction lock and its details.

SQL> create table scott.table1 as select * from dba_objects;

Table created.

SQL> insert into scott.table1 select * from dba_objects;

74638 rows created.

SQL>/

74638 rows created.

SQL>/

74638 rows created.

- To end the transaction, User issues COMMIT.

SQL> commit;

Commit complete.

- As a response to COMMIT, these data blocks are pushed to datafiles and are physically saved in the disk.

- This operation is done by DBWR background process.

- Data blocks occupying only 10% of buffer cache which are part of this transaction are cleaned out.

- Buffer clean out is the operation of removing transaction locks from the headers of data blocks in the cache.

- Rest of the 20% of data blocks in the buffer cache which are part of this transaction still holds details of INACTIVE transaction locks.

- This is referred to COMMIT DELAY some times. To let the commit perform faster database doesn’t clean up all the headers of data blocks in the buffer cache if it occupies more than 10 to 15% of buffer cache size.

- Consider User immediately fires a SELECT statement after the COMMIT.

SQL> select * from scott.TABLE1 where owner=’SCOTT’;

162 rows selected.

- As data blocks required by this SELECT statement are already in the buffer cache, instance will not perform any I/O operation.

- Instance while accessing first 10% of blocks don’t find any difficulty, but to access other 20% of blocks it identifies inactive transaction lock in the headers and cleans them.

- Now this cleaning the inactive transaction lock from the headers of buffer cache results in GENERATING REDO.

Statistics

———————————————————-

9 recursive calls2 db block gets

9 recursive calls2 db block gets

16848 consistent gets

1063 physical reads

497420 redo size

12079 bytes sent via SQL*Net to client

529 bytes received via SQL*Net from client

12 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

162 rows processed

- Re-run the same SELECT query and you don’t find REDO generation as BLOCKS are now cleaned.

SQL> select * from scott.TABLE1 where owner=’SCOTT’;

162 rows selected.

162 rows selected.

Statistics

———————————————————-

4 recursive calls

2 db block gets

9938 consistent gets

0 physical reads

0 redo size

12079 bytes sent via SQL*Net to client

529 bytes received via SQL*Net from client

12 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

162 rows processed

2. SELECT statement after inserting – Small data

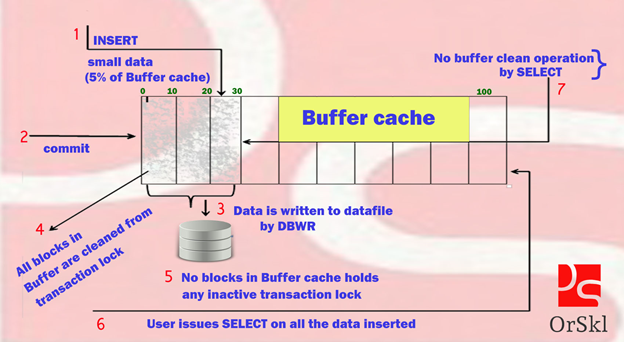

I will be referring to the image below to explain the behavior of SELECT statement just after small INSERT operation.

Explanation for each numbered stages in the image:

- A user performs a SMALL insert operation of 15MB data on a table (5% of the total buffer cache size).

- These data blocks headers in the buffer cache will have transaction lock and its details.

SQL> create table scott.table2 as select * from dba_tables where owner=’SCOTT’;

Table created.

Table created.

SQL> insert into scott.table2 select * from dba_tables where owner=’SCOTT’;

15 rows created.

15 rows created.

- To end the transaction, User issues COMMIT.

- As a response to COMMIT, these data blocks are pushed to datafiles and are physically saved in the disk.

- This operation is done by DBWR background process.

- All the data blocks in buffer cache which are part of this transaction are cleaned out.

- Buffer clean out is the operation of removing transaction locks from the headers of data blocks in the cache.

- There are NO data blocks in the buffer cache which are part of this transaction holding details of INACTIVE transaction locks.

- There is NO COMMIT DELAY now.

- Consider User immediately fires a SELECT statement after the COMMIT.

SQL> select * from scott.table2 where table_name like ‘T%’;

13 rows selected.

13 rows selected.

- As data blocks required by this SELECT statement are already in the buffer cache, instance will not perform any I/O operation.

- There is NO action of cleaning up headers of these data blocks.

- NO REDO generation for this SELECT statement.

Statistics

———————————————————-

5 recursive calls0 db block gets

16 consistent gets

1 physical reads

0 redo size

4644 bytes sent via SQL*Net to client

419 bytes received via SQL*Net from client

2 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

How GOOD is this REDO?

- The REDO generated in the above scenario doesn’t have any details on DATA modifications.

- It writes the details in REDO buffer cache but it turns out to be DIRTY buffer.

- These details are also pushed into REDO LOG FILE.

- But this REDO is not at all useful for database RECOVERY as these are the modifications to blocks in buffer rather than modifications to blocks in datafile.

Conclusion:

- It is true that SELECT statements generate REDO on few occasions.

- OLAP systems will mostly face these situations. After heavy data modifications, it is recommended to run important reports so that end user doesn’t face any performance issue because of BUFFER CLEAN operation.

- REDO generated by SELECT statement is not useful for any RECOVERY purposes.

- Small amount of data modifications per transaction will avoid COMMIT DELAY.

WHY SELECT Statement Generates Redo?

Background:

I was working on one scenario regarding Oracle Statistics (Tracing statistics like sorts, consistent gets etc). So I created one table

Please Note for testing purpose: Db Block Size is 8k

Buffer Cache Size is 4M

SQL> create table test(x char(2000),y char(2000),z char(2000));

Every row will be 6k so it means only one row in one block.

Then for checking the statistics I fired auto-trace command as stated below

SQL> set autotrace traceonly statistics

Then I inserted the 10000 rows.

SQL> insert into test select 'x','y','z' from all_objects where rownum<=10000;

10000 rows created.

Statistics

------------------------------

2274 recursive calls

46503 db block gets

12033 consistent gets

7 physical reads

66050648 redo size

821 bytes sent via SQL*Net to client

822 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

1 sorts (memory)

0 sorts (disk)

10000 rows processed

Then I finally commit the data.

SQL> commit;

Commit complete.

SQL> select * from test;

10000 rows selected.

Statistics

------------------------------

1 recursive calls

0 db block gets

13669 consistent gets

0 physical reads

263680 redo size--------------------à“2636

60514555 bytes sent via SQL*Net to client

7846 bytes received via SQL*Net from client

668 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

10000 rows processed

So what I see here is only statistics which looks ok to me. But after double checking above result, I found “263680 redo size”. This is suprising

What went wrong as I am the only user in Db.

So fired the select statement again

SQL> select * from test ;

10000 rows selected.

Statistics

------------------------------

0 recursive calls

0 db block gets

10008 consistent gets

0 physical reads

0 redo size

60514555 bytes sent via SQL*Net to client

7846 bytes received via SQL*Net from client

668 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

10000 rows processed

Redo size is again 0(0 redo size)

I took a step ahead to find” Why Select Statement generates redo??” I reached one of the internals of Oracle” Block Cleanout”

What is Block Cleanout and Why select statement generated redo: Whenever one fire any dml then oracle automatically acquire lock. In oracle data locks are actually the attributes of the data and they are stored in block header. The transaction will simply go to the data and locks it( if it is not locked). When a row is locked by a transaction, that information is placed in the block header where the row is located. When another transaction wishes to acquire the lock on the same row, it has to travel to the block containing the row anyway, and upon reaching there, it can easily identify that the row is locked, from the block header.

So what portion of the block header contains information on locking? It is a simple data structure called Interested Transaction List (ITL), a linked list data structure that maintains information on transaction address and row-id. What basically it contains is “TRANSACTION ID” which is unique to transactions and represents roll back segment number, slot number, sequence number.

When the transaction is released ID is left behind. So whenever another session comes to lock the same block which was released earlier checks this ID and see the status whether the transaction is active or not. If not then lock it else wait.

In order to reach our subject we need to understand one of the step of COMMIT??

One of the steps of COMMIT is to revisit our blocks if they are still in SGA, if they are accessible (no one modifying them), and then clean out the transaction information known as COMMIT CLEANOUT. Database Commit can clean out the blocks so that “select” which is just for reading will have not to clean it out.

***In Oracle---commit is related with commit list which is 20 blocks long and Oracle will allocate as many as of these list till it needs up to a point. If the sum of the blocks we modify exceeds 10% of the buffer cache size. *** For e.g. ------if blocks in cache is 5000 so oracle will maintain list of 500 blocks. Upon commit, oracle will process 500 blocks for clean out( remove transaction and lock info) and if the blocks are more than this then commit will delayed it and skips it(doesn’t clean them).

In this case when any other session comes say a ‘select’ statement on the same blocks then it will check the block header for all the blocks and tries to clean out the transaction and lock info if present: and if transaction information is still there (but not active) then it will generate redo information (because block become dirty as select statement is cleaning info from the blocks)

Do remember if blocks are perfectly inside 10% of buffer cache (as commit cleanout says) then there will be no delayed cleanout i.e. no redo generation

See below

SQL> set autotrace traceonly statistics

SQL> insert into test select 'x','y','z' from all_objects where rownum<=500;

500 rows created.

Statistics

------------------------------

2155 recursive calls

4863 db block gets

1755 consistent gets

5 physical reads

6615428 redo size

830 bytes sent via SQL*Net to client

821 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

1 sorts (memory)

0 sorts (disk)

500 rows processed

SQL> commit;

Commit complete.

SQL> select * from test;

500 rows selected.

Statistics

------------------------------

1 recursive calls

0 db block gets

1005 consistent gets

0 physical reads

0 redo size---------- (No redo generated. Commit already clean the information)

6051955 bytes sent via SQL*Net to client

1246 bytes received via SQL*Net from client

68 SQL*Net roundtrips to/from client

0 sorts (memory)

0 sorts (disk)

500 rows processed

=========================================================================================

Comments

Post a Comment

Oracle DBA Information